Jira for Non-Engineers: Practical Project Management Across Teams

At the company I worked for, a mid-sized FinTech organization with offices across Europe, the idea for a Jira course for non-engineers did not originate in the Learning & Development (L&D) department. Instead, it grew out of frustrations that Heads of Department and engineering staff had voiced over time. For these stakeholders, recurring problems in cross-departmental collaboration, such as inefficient workflows, inconsistent reporting, and fragmented communication, pointed to the need to upskill a significant number of employees.

To address this, the Head of L&D proposed a crash course on Jira, which I was tasked with designing and developing.

The course was built primarily with Moodle and its native H5P-powered interactive tools, with Canva, Camtasia, and Audiate as supplementary design and media tools. It is hosted on the company’s Moodle-based learning management system (LMS).

Use of Theory & Concepts

I chose ADDIE as the core instructional design framework for its focus on the learner, its clear and structured approach, and the ease with which it can be communicated to stakeholders and SMEs, whom I treated as equal partners in the course creation process. Its emphasis on the Analysis phase suited my analytically driven work style and allowed me to establish a solid foundation before moving on to course design.

I applied a blend of cognitivist and constructivist approaches; both are learner-centered paradigms and are therefore well suited to asynchronous digital learning. Cognitivism informed the course’s structured layout, content chunking, and knowledge reinforcement strategies, while constructivism guided the inclusion of real-world applications, decision-making, problem-solving, and scenario-based learning tasks.

Bloom’s Taxonomy played a pivotal role in shaping the learning outcomes, ensuring alignment between content and assessment. The course structure was sequenced to scaffold from lower-order to higher-order thinking skills, providing both a sense of progression and cognitive challenge.

As a guiding design principle, I integrated Gagné’s Nine Events of Instruction, a model I have valued since my CELTA training. Designing with these principles has always struck me as a natural way to craft instruction that is engaging, motivating, intuitive, and effective.

Examples of how Gagné’s principles were implemented in the project:

Gaining learners’ attention through multimedia content

Clearly outlining goals and objectives for each module

Using recall-based quizzes

Providing scaffolding and downloadable resources

Incorporating low-/no-stakes questions with meaningful feedback

Offering diverse assessment formats, including auto-graded quizzes and rubrics

Gagné’s model is flexible, integrates seamlessly with Bloom’s Taxonomy, and complements the ADDIE model effectively.

📌 While Gagné’s sequence worked well overall, Jira’s exploratory nature occasionally required moving back and forth between the “Present Content” and “Provide Learning Guidance” stages. This could be improved by breaking some of the more complex topics into even smaller, more manageable chunks to ensure consistent acquisition and retention of knowledge and skills.

During content creation, I also applied Mayer’s Principles of Multimedia Learning, a set of evidence-based guidelines rooted in cognitive psychology that promote effective learning and knowledge retention by aligning with how people process and organize information.

These principles guided my use of a combination of text/narration with visuals (video, infographics, and screenshots); segmentation of content into manageable units; alignment of visuals with accompanying text; and the use of signaling elements (e.g., arrows, highlights) to emphasize key points.

Rollout Plan

The Rollout Plan provided a high-level summary of what the project would deliver and how, with a particular focus on LMS integration, staffing, the go-live plan, and learner engagement tactics.

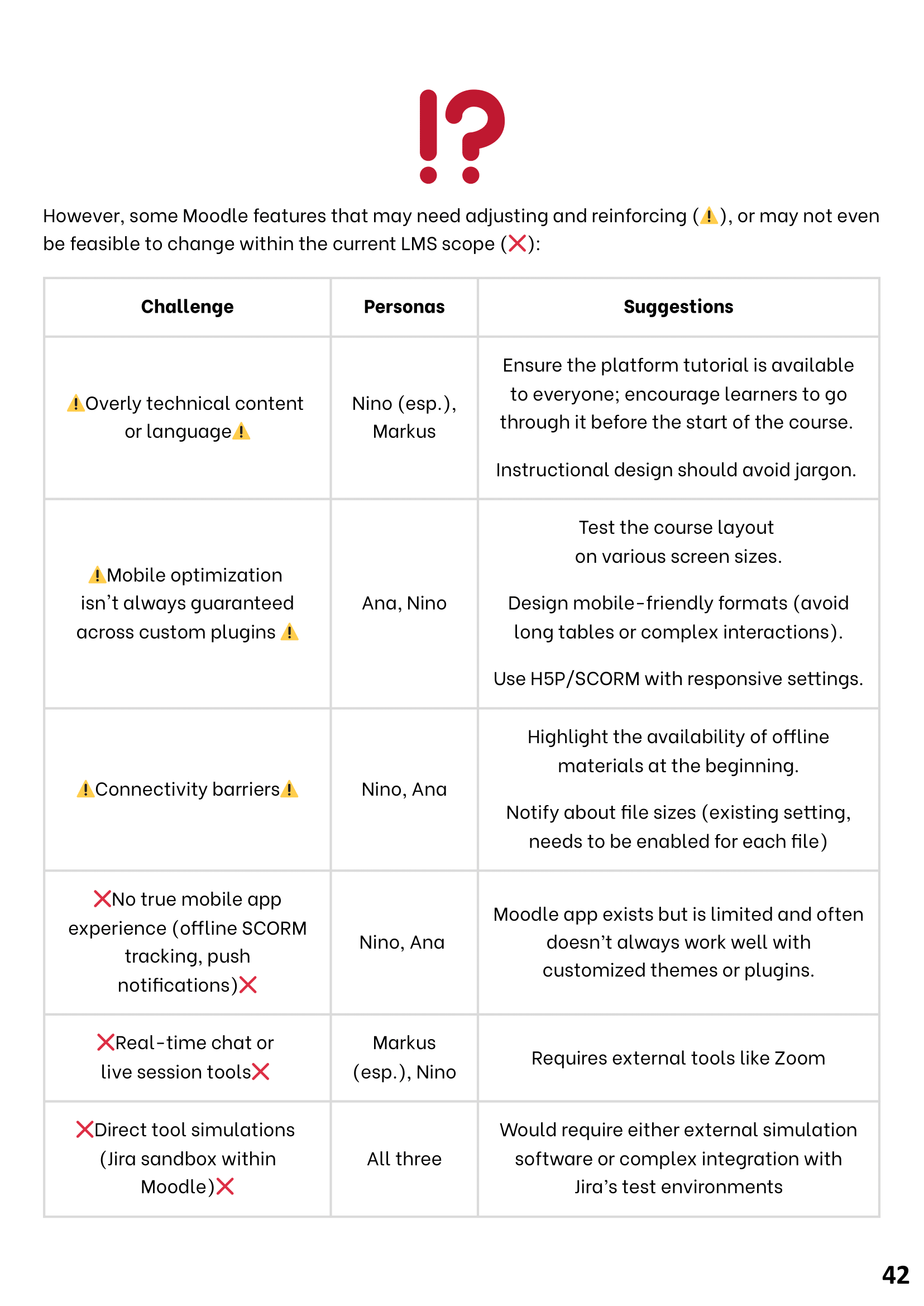

The use of Moodle was non-negotiable, as it is the company’s existing LMS. This imposed certain limitations (e.g., limited mobile optimization and platform-specific jargon) but also offered key advantages (e.g., an intuitive, plugin-enhanced UI, forums, and downloadable content). Working within the platform’s current setup, I aimed to mitigate these constraints while making the most of its strengths.

Similarly, limited staff and resources posed several challenges that had to be taken into account from the early Analysis and Design stages so that provisions could be made (e.g., securing access to a premium Canva account, which was already available within the team and was necessary to maintain design consistency). These challenges highlighted several considerations for future initiatives, such as the importance of scheduling regular syncs with SMEs.

Evaluation Plan

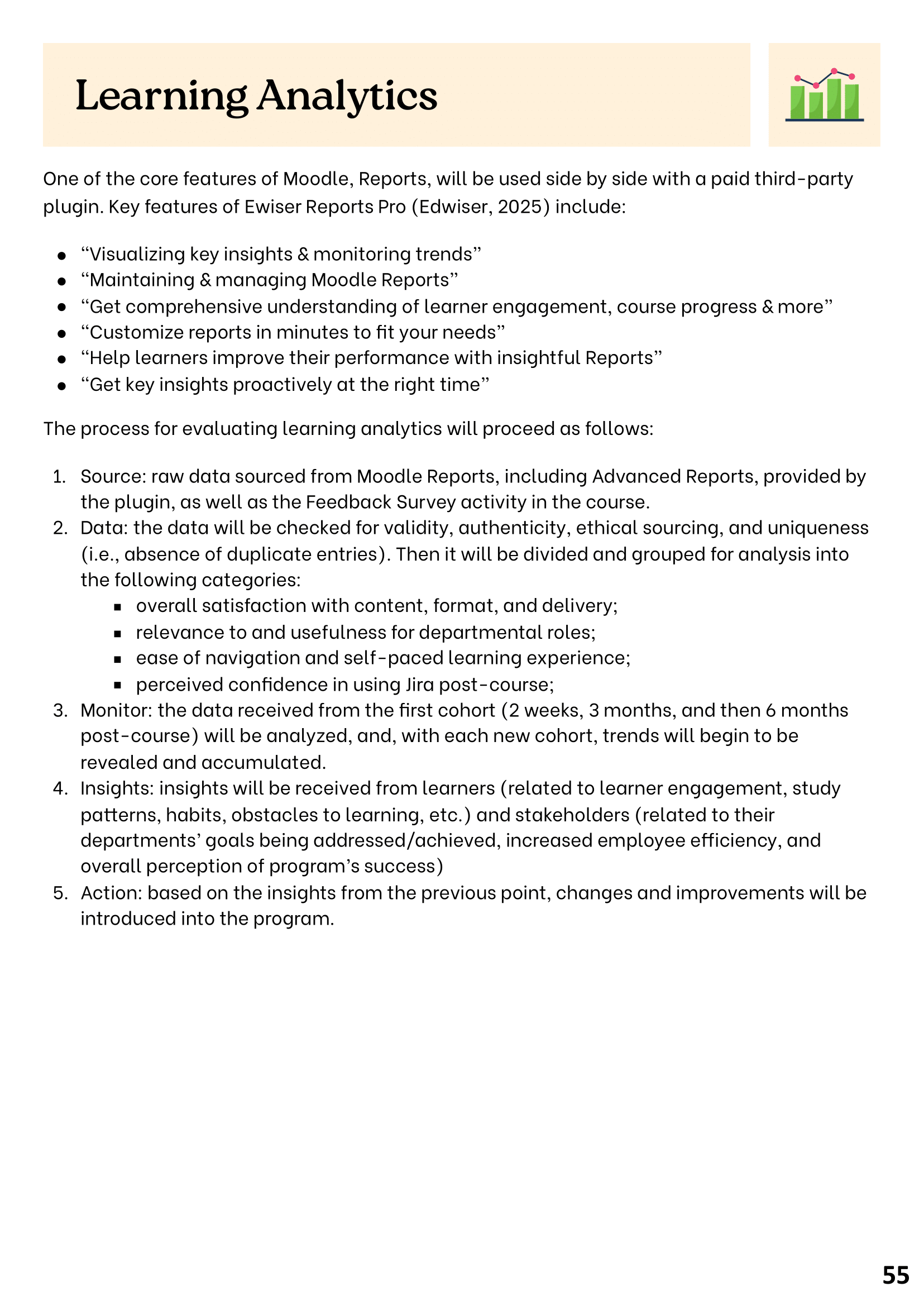

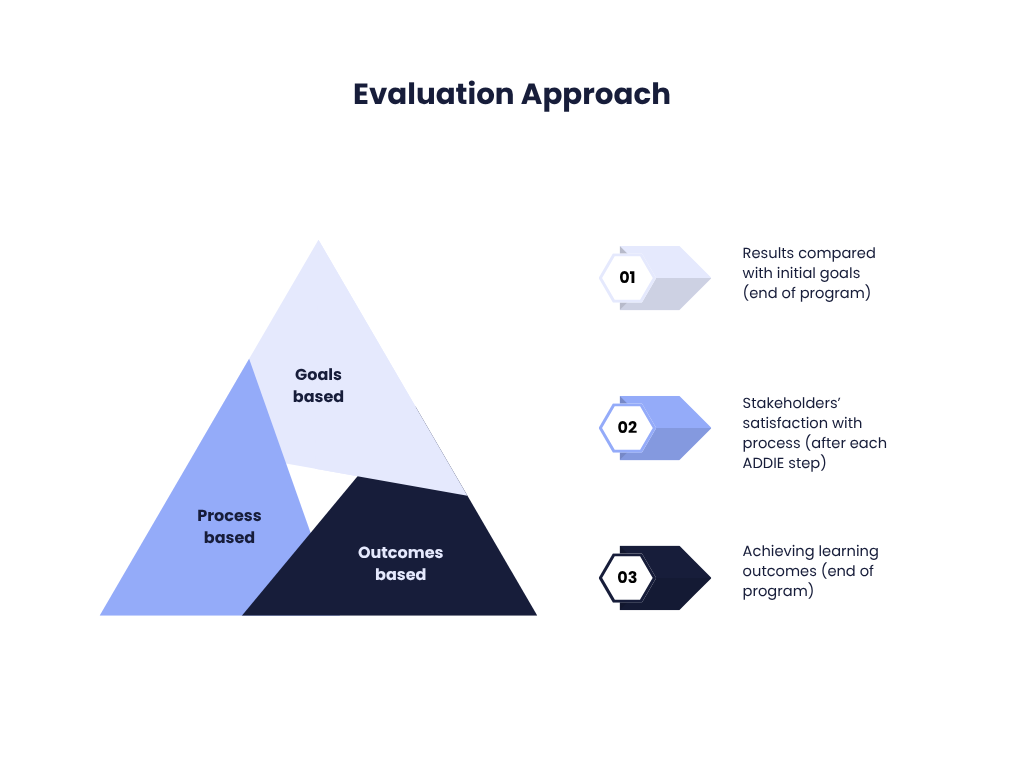

The Evaluation Plan encompassed multiple approaches (goals-, process-, and outcomes-based), aligned with Kirkpatrick’s Four Levels of Evaluation (Reaction, Learning, Behavior, Results). Among the most critical KPIs identified were a reduction in Jira support tickets and increased tool usage metrics.

Another key focus was accessibility evaluation. While awareness of accessibility in our company is gradually growing, it currently centers on customer-facing software, and internal content often lags behind. As a result, many internal accessibility processes and rules had to be created from scratch. Although this required additional time, the resulting frameworks are likely to streamline future efforts.

Learning analytics also proved essential in our context. As our department was undergoing structural changes, modeling and testing data collection workflows for this course provided valuable, concrete insights. These insights helped us avoid many future changes that relying solely on theory or anecdotal recollection could have made necessary.

Background & Strategy

The program is to be evaluated based on the following approaches:

Goals-based evaluation, which compares the results of the digital learning against the initial goals, i.e., how well they address the business needs and problems. Conducted at the end of the program.

Process-based evaluation, which assesses stakeholders’ satisfaction with each step of the ADDIE process. For Analysis, Design, and Development, conducted once the Development stage is finished; for Implementation, conducted once that stage is over.

Outcomes-based evaluation, which focuses on whether the learning outcomes were achieved. Conducted at the end of the program using the Kirkpatrick model, which looks at how learners’ knowledge, skills, and behaviors changed as a result of the course.

As the evaluation strategy, I used Kirkpatrick’s Four Levels of Evaluation, outlining the evaluation goals, data (what to evaluate), methods, and timelines (when to evaluate) for each of the four levels.

KPIs linking Levels 3 and 4 of Kirkpatrick’s Four Levels of Evaluation:

Increase in Jira usage metrics (such as the number of active projects and dashboards created).

Decrease in Jira-related support tickets from non-technical teams.

Increase in cross-departmental projects managed fully in Jira rather than in a hybrid setup.

Reduction in project setup time.

Kirkpatrick’s Four Levels of Evaluation

Level One - Reaction

A feedback form is to be provided for participants to complete at the end of the program, as the final step before they receive their certificates of completion.

Goal: Measure learners’ satisfaction, perceived relevance, and engagement with the course.

What to evaluate:

Overall satisfaction with content, format, and delivery.

Relevance to and usefulness for their departmental roles.

Ease of navigation and a self-paced learning experience.

Perceived confidence in using Jira post-course.

Methods:

Post-course survey (on Moodle).

Quick star rating to capture gut reactions.

Likert-scale items (e.g., "The course content was relevant to my daily tasks" — strongly disagree to strongly agree).

Open-ended questions (e.g., "What did you find most useful?", "What could be improved?").

When to evaluate: 1-2 weeks post-course

Level Two - Learning

Learners are to complete various assessments, created based on the learning outcomes, at the end of each module and as a final course assessment.

Goal: Assess the knowledge and skills gained as a result of the course.

What to evaluate:

Ability to navigate and customize the Jira interface.

Understanding of Jira structure (projects, dashboards, issue types).

Correct creation and management of tickets.

Workflow customization ability.

Reporting and dashboard design skills.

Ability to identify issues and propose integrations.

Methods:

Embedded module quizzes (scenario-based, task-oriented).

Practical assignments, such as creating three tickets of different types with the correct configuration (Module 3), modifying a sample workflow for a specific departmental need (Module 5), and proposing an integration relevant to the learner’s department and explaining its expected benefit (Module 8).

End-of-course assessment simulating a realistic task (create and customize a project to reflect one of your department’s processes).

When to evaluate: during the course

Level Three - Behavior

After completing the program, participants’ Line Managers are to be asked to assess changes in how participants apply their Jira skills and knowledge to their departments’ needs.

Goal: Evaluate whether learners apply their new Jira skills on the job.

What to evaluate:

Increased independent use of Jira (less reliance on colleagues).

Improved setup and management of departmental projects.

Effective use of comments, mentions, and dashboards in real-world tasks.

Application of new workflows and reporting in team practices.

Methods:

Manager feedback survey, ~1–2 months post-course (e.g., "Have you observed increased confidence and competence in Jira?").

Self-reflection survey for learners (e.g., "Which Jira features have you started using regularly?").

Informal interviews.

Jira usage analytics, such as an increase in project updates and new dashboards created (reports to be requested from managers).

When to evaluate: 2–3 months post-course (first cohort), to allow time for behavioral change.

Level Four - Results

Goal: Measure the organizational or departmental impact of the training.

What to evaluate:

Increased project visibility and cross-departmental coordination.

Reduction in email chains or reliance on spreadsheets for task tracking.

Higher rate of on-time project deliveries.

Fewer Jira-related support requests to technical teams.

Methods:

Department-level metrics (e.g., number of projects initiated and maintained in Jira).

Feedback from cross-functional project leads on collaboration improvements.

Comparison of pre- and post-training tool adoption rates.

Reduction in tool-related issues or escalations reported to the helpdesk (as reported by the helpdesk based on its analytics).

When to evaluate: 3–6 months post-course, aligning with business reporting cycles.

Personal Learning & Reflections

This project deepened my understanding of the distinct role of a Learning Experience Designer, particularly compared to that of an Instructional Designer. Since this was also my graduation project for my program at the Digital Learning Institute, I was able to benefit from the structured procedures and templates provided to learners. The strategic approach to course creation I employed aligns well with my values and my passion for well-structured processes. It reinforced the importance of upfront planning and thoughtful design by showing how they add substance and depth to a course, ultimately resulting in more meaningful and effective learning.

The Jira for Non-Engineers course significantly enhanced my skills in stakeholder communication, especially in aligning course outcomes with business goals across multiple departments. It also deepened my practical knowledge of accessibility, particularly how to apply best practices within technically constrained environments.

One of the most fulfilling aspects of this journey was designing realistic, relatable scenarios that made Jira feel approachable and relevant for non-technical staff. I remember struggling with Jira myself when I first encountered it, and later having to help my L&D colleagues master its functionalities and quirks. Transforming those challenges into accessible, intuitive content for others, in a structured and formal way, felt both motivating and meaningful. This experience reminded me why I am passionate about learning design: helping people solve real problems through thoughtful experiences.

This project provided valuable organizational and practical insights that will directly inform my future course development. Having such a structured and detailed framework makes it easier to plan ahead and anticipate many potential difficulties. This was my first time applying the knowledge gained in the Digital Learning Design program, and the process demonstrated the practical relevance of those new skills.

Moreover, this project coincided with a major transformation of the department’s structure, employee responsibilities, workflows, and policies. The project became instrumental in establishing a baseline and creating the templates needed for our future projects, potentially for years to come.

I was able to make what I had learnt an integral part of the company’s course creation and delivery processes. Beyond being a welcome opportunity to shape these processes in the way I know and prefer, assisting the Head of Department with policy-making was a meaningful, strategic addition to my resume that should help advance my career in LX Design. It confirmed my ability to embed best practices into learning design processes at scale.